For highly available environments, extending the network between two locations has been common practice for years. For example, if you have an HA SAN that is synchronously mirroring between two locations, the VMware/Hyper-V cluster will often be extended between those two locations as well. That means VMs can migrate between the two locations with vMotion/Live Migration, or be automatically recovered from a server failure with VMware HA/Hyper-V Failover Clustering.

Stretch Layer 2 Networks and Unhappy Network Admins

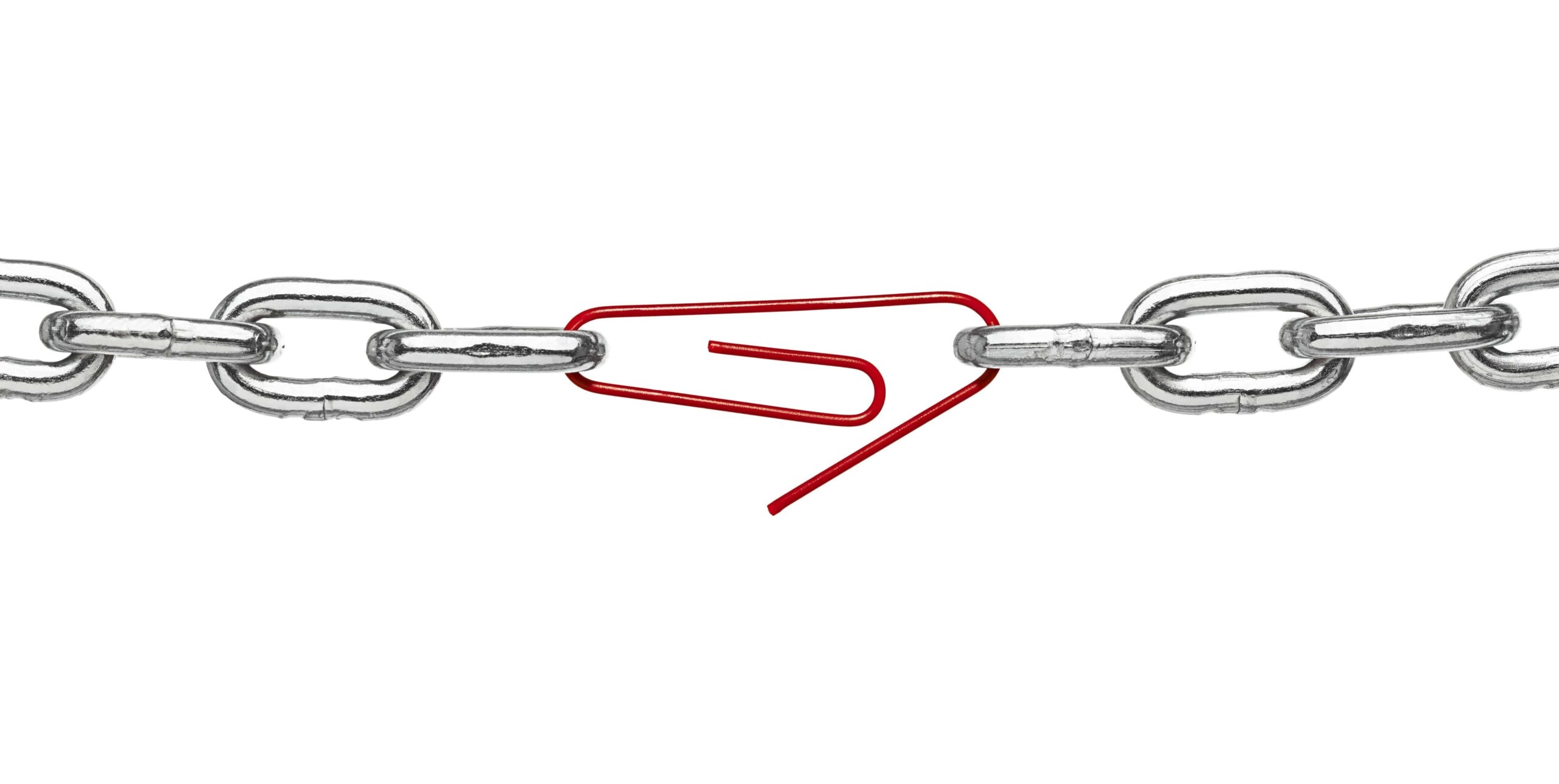

However, this raises the question of how a VM’s IP address can move between the two locations. The logical answer has always been to extend the network over Layer 2, but networking teams usually HATE that. If the two locations are two buildings on the same campus, they will sometimes accept it, but when the link has to span multiple sites or (heaven forbid) cities, it incites networking riots.

Networking-wise, it can be a management nightmare. Without careful planning and manual prioritization, stretching Spanning Tree between two sites can introduce some very inefficient paths that cause real oddities with connectivity. Further, STP will always block at least one link in any redundant loop, which can mean that only half the available bandwidth can be used. On top of all that, the links we’re basically treating as really long Ethernet cables actually traverse a series of leased lines and switches/routers, which makes them behave differently than if they truly were just long runs of CAT6.
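To make the Spanning Tree point concrete, here is a minimal sketch of how a loop-free tree ends up blocking a link. It is a deliberate simplification: it builds the tree with a plain breadth-first search from the root bridge, whereas real STP elects the root and picks ports using bridge IDs and path costs. The switch names (`A1`, `A2`, `B1`, `B2`) and the four-link topology are hypothetical.

```python
from collections import deque

def blocked_links(links, root):
    """Return the links left out of a loop-free tree grown from `root`.

    Simplified model: breadth-first search stands in for STP's
    bridge-ID and path-cost election.
    """
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, []).append(b)
        adjacency.setdefault(b, []).append(a)

    visited = {root}
    tree = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                tree.add(frozenset((node, neighbor)))
                queue.append(neighbor)

    # Any link not in the tree would form a loop, so it is blocked.
    return [link for link in links if frozenset(link) not in tree]

# Hypothetical topology: two switches per site, two inter-site links.
links = [
    ("A1", "A2"),  # inside site A
    ("B1", "B2"),  # inside site B
    ("A1", "B1"),  # inter-site link 1
    ("A2", "B2"),  # inter-site link 2
]

print(blocked_links(links, root="A1"))  # [('B1', 'B2')]
```

With the root at `A1` in this toy model, the blocked link is the one inside site B, so traffic between `B1` and `B2` has to cross to site A and back. That is the kind of inefficient path that careful root placement and prioritization are meant to avoid.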

But Stretch Layer 2 Can Give You More Flexibility (See What We Did There?)

For the server teams, it’s an obvious solution: the servers need to move between the two sites, the farther apart the better, because that gives us MUCH more availability, and it’s sensible. iSCSI even lets the storage team stretch a synchronously mirroring SAN without having to get dedicated dark fiber … even better!

So, what’s the fix? Well, it depends on who you ask, of course.

VXLAN or NVGRE

From a networking standpoint, VXLAN and NVGRE both offer options for fixing this problem. They are (kind of) competing technologies that allow Layer 2 networks to be extended over a Layer 3 (routed) network by encapsulating Layer 2 frames inside IP packets. That means two separate sites, each running its own disparate switching infrastructure, can share the same subnet without a Layer 2 link between them. It also means that rather than paying for a dedicated point-to-point network connection (or a WAN link that acts like one), I can technically use some fast internet pipes and simply tunnel across them. From the networking side, it gives me much more control over where my data flows and how it gets to the other side, while allowing me to treat the two sites as separate entities.
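To show what "tunneling Layer 2 over Layer 3" looks like on the wire, here is a minimal sketch of the 8-byte VXLAN header described in RFC 7348: an 8-bit flags field with the VNI-valid bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits. The function names and the VNI value 5000 are illustrative assumptions, and the sketch leaves out the outer UDP/IP/Ethernet headers that a real tunnel endpoint would add.

```python
import struct

VXLAN_UDP_PORT = 4789         # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08   # "I" flag: the VNI field is valid

def build_vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)

def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header.

    The result is what would travel as the UDP payload between sites.
    """
    return build_vxlan_header(vni) + inner_frame

header = build_vxlan_header(5000)  # hypothetical VNI for the stretched subnet
print(header.hex())                # 0800000000138800
print(parse_vni(header))           # 5000
```

The VNI plays roughly the role a VLAN ID plays on a stretched Layer 2 link: both sites agree on a number that identifies the shared segment, but the frames reach the other side as ordinary routed UDP traffic.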

Living with Stretch Layer 2

The server team usually just wants the network at both sites, and doesn’t necessarily care how it happens as long as it’s low latency, fast, and doesn’t mess with the MTU. From their perspective, stretch Layer 2 works great. VXLAN and NVGRE both add potential troubleshooting hurdles, since latency, routing, and other issues become possible bottlenecks. This is one of the reasons that, with VMware NSX or Hyper-V virtual switching, VXLAN/NVGRE can be handled inside the hypervisor itself, giving a lot of the control over performance back to the server side. However, both of those hypervisor networking technologies are very complicated to implement and take a lot of time and effort.
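The MTU concern is easy to quantify. The sketch below adds up the encapsulation overhead under some stated assumptions: an IPv4 outer header with no options, no VLAN tags on the inner frame, no IPsec or other extra wrapping, and an 8-byte GRE header (base header plus key field) for NVGRE. The function names are made up for illustration.

```python
# Header sizes in bytes (assumptions: IPv4 outer header, untagged inner frame).
INNER_ETHERNET = 14
OUTER_IPV4 = 20
UDP = 8
VXLAN = 8
GRE_WITH_KEY = 8

# Bytes of the underlay IP MTU consumed before the inner IP packet starts.
OVERHEAD = {
    "vxlan": INNER_ETHERNET + VXLAN + UDP + OUTER_IPV4,   # 50
    "nvgre": INNER_ETHERNET + GRE_WITH_KEY + OUTER_IPV4,  # 42
}

def max_inner_mtu(underlay_mtu: int, encap: str) -> int:
    """Largest VM-side IP MTU that fits without fragmenting the tunnel."""
    return underlay_mtu - OVERHEAD[encap]

def required_underlay_mtu(inner_mtu: int, encap: str) -> int:
    """Underlay IP MTU needed so VMs can keep the given MTU."""
    return inner_mtu + OVERHEAD[encap]

print(max_inner_mtu(1500, "vxlan"))          # 1450
print(max_inner_mtu(1500, "nvgre"))          # 1458
print(required_underlay_mtu(1500, "vxlan"))  # 1550
```

In other words, if the path between sites only carries 1500-byte packets, either the VMs drop to a smaller MTU or the tunnel fragments. Keeping the servers at 1500 means the underlay has to carry at least 1550 bytes for VXLAN under these assumptions.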

Again, what’s the fix?

Compromise!

Whether it’s stretch Layer 2 or VXLAN/NVGRE, there are some inherent inefficiencies in the design. For example, excluding some exotic protocols, the gateway for the stretched subnet can only live on one side or the other. That means if you’re in site B and your gateway is in site A, you have to cross the link to reach your gateway, which is less efficient. Broadcast traffic is also spread across the networks on both sides. VXLAN makes life easier for the networking team and is potentially easier for them to troubleshoot, whereas stretch Layer 2 has less complexity for server teams to troubleshoot.
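The gateway inefficiency can be put into numbers with a small sketch. It counts how many times a routed packet crosses the inter-site link, given where the source VM, the subnet's gateway, and the destination live. The site names and the 2 ms one-way link latency are hypothetical, and the model assumes a single gateway that both subnets route through.

```python
def link_crossings(src_site: str, gateway_site: str, dst_site: str) -> int:
    """Count inter-site link crossings for one routed packet.

    The packet goes source -> gateway -> destination; each leg that
    changes site costs one trip across the link.
    """
    crossings = 0
    if src_site != gateway_site:
        crossings += 1
    if gateway_site != dst_site:
        crossings += 1
    return crossings

def added_latency_ms(src_site: str, gateway_site: str, dst_site: str,
                     one_way_link_ms: float) -> float:
    """One-way latency added by inter-site crossings alone."""
    return link_crossings(src_site, gateway_site, dst_site) * one_way_link_ms

# Two VMs in site B on different subnets, gateway in site A:
print(link_crossings("B", "A", "B"))         # 2
print(added_latency_ms("B", "A", "B", 2.0))  # 4.0

# Same traffic when everything is local to site A:
print(link_crossings("A", "A", "A"))         # 0
```

The first case is the awkward one: both VMs sit in the same building, yet every routed packet between them makes a round trip to the other site.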

With stretch Layer 2, the link between the two sites (often 10 Gbit) can plug right into the switches, which allows for a very simple design. With VXLAN/NVGRE, multiple links can be used and disparate paths can be available, but normally the only devices that support it are the firewalls/routers. Keep this connectivity point in mind while reaching a compromise. Switches rarely have trouble handling high bandwidth, but routers/firewalls are often designed for substantially less. Make sure the endpoint of the VXLAN/NVGRE connection can handle the extra bandwidth load.

In the end, neither option is wrong or right. They both have pros and cons. The business availability gains of having the same subnet available in both locations for server traffic normally mean that the strife of either option is worth the effort. Both teams need to work together carefully, however, to design the best solution for each environment.