Flash storage has evolved over the years, and the specific improvements (and inevitable tradeoffs) have not been very transparent to end users. Flash performance, density and lifecycle have changed a lot, introducing real variety into the market. Typically, speed and capacity are the main factors when evaluating flash storage options, so they are what mostly drive the cost.

Enterprise storage scenarios typically demand high performance and a minimum guaranteed lifespan, whereas consumer-grade drives can cut some corners. In this article, we will break down the progression of NAND technology in storage so you can understand the different use cases, namely workstations versus servers, and what you need those machines to do.

What is NAND?

NAND flash is made up of cells that we write bits to in order to save information. Its name is a contraction (surprisingly not an acronym!) of “NOT AND,” because its cells are wired together in a way that resembles a NAND logic gate. Over the years, we have increased the number of bits written to a single cell in order to achieve higher density and drive the price down. In other words, storing two or three bits in the same cell doubles or triples the density, so we get more for less.
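To make the density arithmetic concrete, here is a minimal Python sketch using the bits-per-cell figures for the four NAND types covered in the next section. It assumes an idealized drive with no spare area, error-correction overhead or layer stacking; the function names and the sample capacity are illustrative only.

```python
# Bits stored per cell for each NAND type covered in this article.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def density_vs_slc(nand_type: str) -> int:
    """How many times more bits the same number of cells holds compared with SLC."""
    return BITS_PER_CELL[nand_type] // BITS_PER_CELL["SLC"]


def cells_needed(capacity_bits: int, nand_type: str) -> int:
    """Cells required to store capacity_bits, ignoring spare area and ECC overhead."""
    bits = BITS_PER_CELL[nand_type]
    # Ceiling division: a partially filled cell still has to be manufactured.
    return -(-capacity_bits // bits)


if __name__ == "__main__":
    capacity = 1_000_000  # an arbitrary example capacity, in bits
    for name in BITS_PER_CELL:
        print(f"{name}: {density_vs_slc(name)}x SLC density, "
              f"{cells_needed(capacity, name):,} cells")
```

Going from SLC to MLC halves the cell count for the same capacity, and QLC needs a quarter as many cells as SLC, which is where the cost savings come from.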

Types of NAND

SLC

When solid state drives first came out, they used Single-Level Cell (SLC) NAND, which stores one bit per cell. This will make more sense once we get to the other iterations of NAND, but each SLC cell only has to tell two states apart: a 0 or a 1.

SLC offered strong performance and durability because each cell holds just one bit. SLC is rated for about 100,000 program/erase (P/E) cycles, which we now see as a very long lifespan in the world of flash. The drawback of SLC is that it was quite costly to manufacture: with only one bit per cell, you needed many more cells to reach a reasonable capacity. Yes, it was fast and more dependable, but it didn’t scale well.

When flash was new and SLC was the main NAND technology, it was very expensive, and you rarely saw drives larger than a couple hundred gigabytes. Today, SLC isn’t practical, and it’s unlikely you’ll find true SLC in your storage.

MLC

Engineers, always looking to make things better, wanted to improve density. Meet the Multi-Level Cell (MLC), which stores two bits per cell, double what SLC could handle.

However, MLC is rated for only about 10,000 P/E cycles. Yes, it’s double the density of SLC, but on average it lasts only 10 percent as long. That seems like a big tradeoff. However, doubling the density effectively cut the cost of flash in half: you need half as many cells to reach the capacity you need. This technology brought flash into the mainstream.

TLC

Triple-Level Cell (TLC) stores three bits per cell, just as MLC stores two. Once again, density and lifecycles move in opposite directions. TLC stores three times as many bits per cell as SLC but, on average, is rated for about 95 percent fewer P/E cycles than its SLC ancestor. The TLC era also brought 3D NAND, which stacks cells vertically in layers instead of laying them out on a single flat plane. We won’t go too deep into this, but it’s good to know because it was an important shift in the industry and helped preserve some life in the cells.

3D NAND adds layers, which can drastically increase density. Early 3D NAND chips had 32 layers, and 64-layer chips are now available.

Most of your major production drives out there today are TLC technology.

QLC

Quad-Level Cell (QLC), lo and behold, stores four bits of data per cell. It’s much newer and less common right now, but you can find it in production drives. Some manufacturers build it with up to 96 layers, but it lasts only about 1,000 P/E cycles. For those still keeping score, we’re now at one percent of where we started with SLC. For reference, the Intel 660P is one of the first mass-produced QLC drives.
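Here is the endurance scorecard so far as a small Python sketch. The P/E figures are the approximate ones cited in this article; the TLC value of 5,000 cycles is our assumption, inferred from the 95 percent dropoff mentioned earlier, and real ratings vary by manufacturer and process.

```python
# Approximate program/erase (P/E) cycle ratings cited in this article.
# TLC's 5,000 is an assumption inferred from the "95 percent dropoff" vs. SLC.
PE_CYCLES = {"SLC": 100_000, "MLC": 10_000, "TLC": 5_000, "QLC": 1_000}


def endurance_vs_slc(nand_type: str) -> float:
    """Rated P/E cycles as a percentage of SLC's rating."""
    return 100 * PE_CYCLES[nand_type] / PE_CYCLES["SLC"]


for name, cycles in PE_CYCLES.items():
    print(f"{name}: {cycles:>7,} P/E cycles, {endurance_vs_slc(name):.0f}% of SLC")
```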

You Never Mentioned Performance, Though

Right. As you may have assumed from seeing the negative relationship between density and lifecycles, the same happens with performance. However, it’s hard to show good comparative numbers because you can’t compare apples to apples. (No one SSD was made in all four NAND types, so measuring performance equally is impossible.)

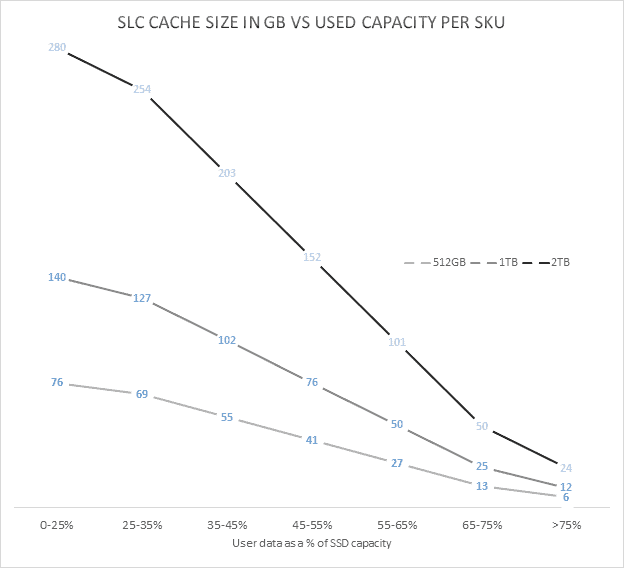

Regardless, because TLC and QLC suffer significant performance degradation, manufacturers added an SLC cache: a portion of the drive’s NAND that operates in SLC mode, storing one bit per cell. When a drive has a static SLC cache partitioned out, incoming writes flow through the SLC portion first and are later moved to the rest of the drive for long-term storage. Some drives keep this reserved cache as a fixed minimum but let it expand dynamically into free space; this is called a variable-dynamic SLC cache. As a result, the drive writes to the SLC portion first, and as the drive fills up, less free space is available to run in SLC mode, so the portion that functions as SLC (which is more performant) slowly scales down.

Above you can see that as the drive fills up, the cache size decreases. This chart is for the aforementioned Intel 660P QLC drive. Your mileage may vary with other drives, but you can expect this general trend.
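The shrinking-cache trend can be illustrated with a toy model. This Python sketch assumes a hypothetical static-plus-dynamic design: a fixed SLC reserve that never shrinks, plus a dynamic portion carved out of free space. Because a cell run in SLC mode holds one bit instead of three (TLC) or four (QLC), free space converts into SLC cache at only 1/bits_per_cell of its native size. The function name, the 1,000 GB capacity, the 10 GB reserve and the 100 GB dynamic cap are made-up values, not the Intel 660P’s actual specifications.

```python
from typing import Optional


def slc_cache_gb(capacity_gb: float, used_gb: float, static_gb: float,
                 bits_per_cell: int = 4,
                 dynamic_cap_gb: Optional[float] = None) -> float:
    """Estimate the SLC cache size under a hypothetical static-plus-dynamic model.

    capacity_gb:    user capacity in the drive's native mode (TLC or QLC)
    used_gb:        how much of that capacity already holds data
    static_gb:      fixed SLC reserve that never shrinks
    bits_per_cell:  3 for TLC, 4 for QLC
    dynamic_cap_gb: optional firmware limit on the dynamic portion
    """
    if not 0 <= used_gb <= capacity_gb:
        raise ValueError("used_gb must be between 0 and capacity_gb")
    free_gb = capacity_gb - used_gb
    # A cell in SLC mode stores 1 bit instead of bits_per_cell bits,
    # so free native space yields only 1/bits_per_cell as much SLC cache.
    dynamic_gb = free_gb / bits_per_cell
    if dynamic_cap_gb is not None:
        dynamic_gb = min(dynamic_gb, dynamic_cap_gb)
    return static_gb + dynamic_gb


if __name__ == "__main__":
    # Made-up figures for a 1,000 GB QLC drive; not any real product's specs.
    for pct_full in (0, 25, 50, 75, 90, 100):
        used = 1_000 * pct_full / 100
        cache = slc_cache_gb(1_000, used, static_gb=10, dynamic_cap_gb=100)
        print(f"{pct_full:>3}% full -> {cache:.1f} GB of SLC cache")
```

In this model the cache stays at its cap while plenty of space is free, shrinks as the drive fills, and never drops below the static reserve, which matches the general trend in the chart.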

Application of NAND

Today, TLC and QLC NAND each have their own applications. TLC drives are much better suited to primary datasets like databases or anything else that’s read-write heavy. You want your servers to have MLC/TLC drives. QLC is better used for archive or “cheap and deep” storage. If you have end users who aren’t what we’d consider “power users,” QLC drives are just fine for their workstations.
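To see why lifecycle ratings matter more for read-write-heavy servers than for light workstation use, here is a back-of-the-envelope Python sketch. It assumes total writes before wear-out are roughly capacity times P/E cycles divided by write amplification, where write amplification (the extra internal writes a drive performs for each write you send it) is an assumed factor of 2. The function name and daily write volumes are hypothetical, and real drive ratings also depend on firmware, error correction and spare area.

```python
def estimated_lifetime_years(capacity_tb: float, pe_cycles: int,
                             daily_writes_tb: float,
                             write_amplification: float = 2.0) -> float:
    """Rough years until the NAND reaches its rated P/E cycles.

    Assumes wear is spread evenly across all cells and that
    write_amplification (an assumed value) stays constant.
    """
    if daily_writes_tb <= 0:
        raise ValueError("daily_writes_tb must be positive")
    # Total host data the drive can absorb before hitting its P/E rating.
    total_writes_tb = capacity_tb * pe_cycles / write_amplification
    return total_writes_tb / (daily_writes_tb * 365)


if __name__ == "__main__":
    # Hypothetical workloads: a light workstation and a write-heavy server.
    workloads = {"workstation": 0.05, "server": 2.0}  # TB written per day
    for nand, cycles in (("TLC", 5_000), ("QLC", 1_000)):
        for role, tb_per_day in workloads.items():
            years = estimated_lifetime_years(1.0, cycles, tb_per_day)
            print(f"1 TB {nand}, {role}: ~{years:.1f} years")
```

Under these assumptions, the 1 TB QLC drive wears out in under a year at the server’s write rate but would take decades to reach its rating under light workstation use, the same split behind the recommendations above.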

Note that the numbers we cited above assume all four types of NAND were manufactured the same way, which in real life is not quite accurate. Additionally, there are other manufacturing and programming variables that impact density, performance, durability and cost.

Not all SSDs are created equal, so remember that when you’re looking at new computers, servers or storage. Price shouldn’t be your only driving factor.